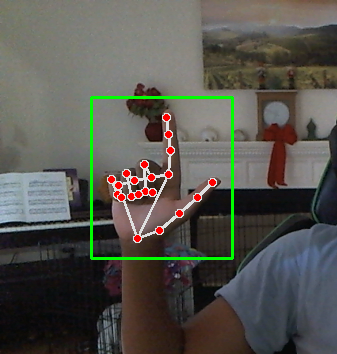

HackerPulse Dispatch

SLR (sign language recognition) technology uses AI-driven algorithms to translate gestures, body movements, and facial expressions into spoken or written language. This requires complex machine learning models trained on thousands of hours of sign language data. These systems rely heavily on deep learning frameworks to capture subtle nuances such as finger placement or hand motion speed.

The Sign-Lingual Project is a real-time sign language recognition system that translates hand gestures into text or speech using machine learning and OpenAI technologies. It uses an OpenAI large language model (LLM) for typo correction and contextual understanding, and OpenAI's speech tooling for the spoken output. The project description credits Whisper with the text-to-speech step, although Whisper itself is OpenAI's speech recognition (speech-to-text) model.
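A real-time recognizer typically produces one predicted label per video frame, and those predictions flicker. The sketch below shows one possible way to turn such a noisy stream into text before any typo correction is applied. It is not taken from the Sign-Lingual Project: the `GestureDebouncer` class, the `frames_to_text` helper, the `BLANK` label for "no hand visible", and the window sizes are all hypothetical choices made for illustration.

```python
from collections import Counter, deque

BLANK = "_"  # hypothetical label meaning "no hand in frame"


class GestureDebouncer:
    """Emit a label only once it dominates a sliding window of frame predictions."""

    def __init__(self, window=5, min_votes=4):
        self.recent = deque(maxlen=window)  # last `window` per-frame labels
        self.min_votes = min_votes          # votes needed to call a label stable
        self.last_emitted = None            # avoids re-emitting a held gesture

    def update(self, label):
        """Feed one per-frame label; return it if it just became stable, else None."""
        self.recent.append(label)
        top, votes = Counter(self.recent).most_common(1)[0]
        if votes < self.min_votes:
            return None
        if top == BLANK:
            # A stable pause resets the state so the same letter can repeat.
            self.last_emitted = None
            return None
        if top == self.last_emitted:
            return None
        self.last_emitted = top
        return top


def frames_to_text(labels, window=5, min_votes=4):
    """Collapse a sequence of per-frame labels into the emitted text."""
    debouncer = GestureDebouncer(window, min_votes)
    return "".join(ch for ch in map(debouncer.update, labels) if ch is not None)


print(frames_to_text(list("HHHHHIIIII")))
```

With these settings a single misclassified frame inside a held gesture is ignored, and a stable pause lets the same letter be emitted twice in a row. The resulting string is what a downstream language model would then be asked to correct.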

For many deaf and mute people, sign language is the primary way to express their needs and feelings. Most hearing people do not understand sign language, which creates many difficulties for deaf and mute people in their social lives. Sign language interpretation systems and applications have received a lot of attention in recent years. In this paper, we review sign language recognition and

The AI-powered sign language recognition project aims to develop, implement, and evaluate an advanced system that accurately interprets sign language gestures and translates them into text or speech. The project involves selecting and analyzing a diverse range of sign language gestures across various contexts and applications. "By improving American Sign Language recognition, this work contributes to creating tools that can enhance communication for the deaf and hard-of-hearing community," says Dr. Stella Batalama, Dean of the FAU College of Engineering and Computer Science. Dr. Batalama emphasizes that this technology could make daily interactions in education, healthcare, and social settings more seamless and

Sign Language Recognition: AI as a Bridge for Inclusive Communication

The goal of this deep learning project is to build a sign language recognition model based on a convolutional neural network (CNN), using the Keras package for the model and OpenCV for live image capture.
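As a rough illustration of what such a model could look like, here is a minimal Keras sketch of a small CNN classifier. The project's actual architecture is not given in the text, so everything specific here is an assumption: the function name `build_sign_cnn`, the 64x64 grayscale input, the 26 output classes, and the layer sizes are placeholders.

```python
def build_sign_cnn(num_classes=26, input_shape=(64, 64, 1)):
    """Build a small CNN that classifies fixed-size grayscale hand images.

    num_classes and input_shape are illustrative assumptions, not values
    taken from the project described above.
    """
    # Imported lazily so this file can be loaded without TensorFlow installed.
    from tensorflow import keras
    from tensorflow.keras import layers

    model = keras.Sequential([
        keras.Input(shape=input_shape),
        layers.Conv2D(32, 3, activation="relu"),   # low-level edges and contours
        layers.MaxPooling2D(2),
        layers.Conv2D(64, 3, activation="relu"),   # larger hand-shape patterns
        layers.MaxPooling2D(2),
        layers.Flatten(),
        layers.Dense(128, activation="relu"),
        layers.Dropout(0.5),                       # regularization for small datasets
        layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",    # expects integer class labels
        metrics=["accuracy"],
    )
    return model
```

For live use, frames read with OpenCV (for example via `cv2.VideoCapture(0)`) would need to be converted to grayscale, resized to the model's input size, and scaled to the range the model was trained on before being passed to `model.predict`.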